What is a POS tagging?

- a label assigned to a word in a sentence indicate its grammatical role.

Assumptions that go into a Hidden Markov Model

- Markov assumption: The current state (POS tag) depends only on the previous state.

- Output independence:The obserced word depends only on the current state(tag), not on other words or states.

- Stationary Transition and emission probabilities do not change over time.

Time complexity of the Viterbi algorithm

O(T×N2) where:

T = length of the sequence (number of words)

N = number of possible tags

Its practical for POS tagging since the number of tags (N) is small (N < 50 for most languages)

Example

Answer

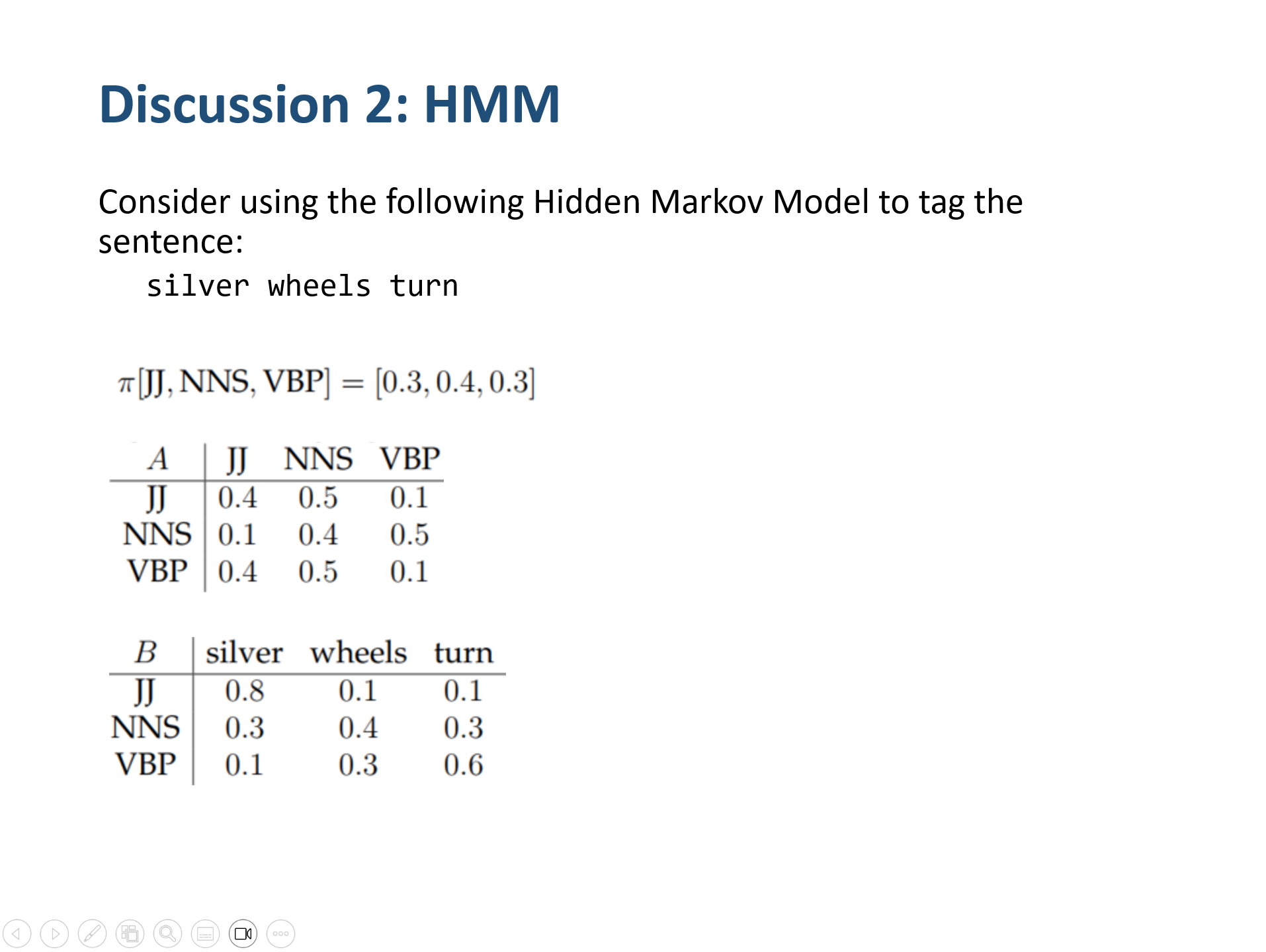

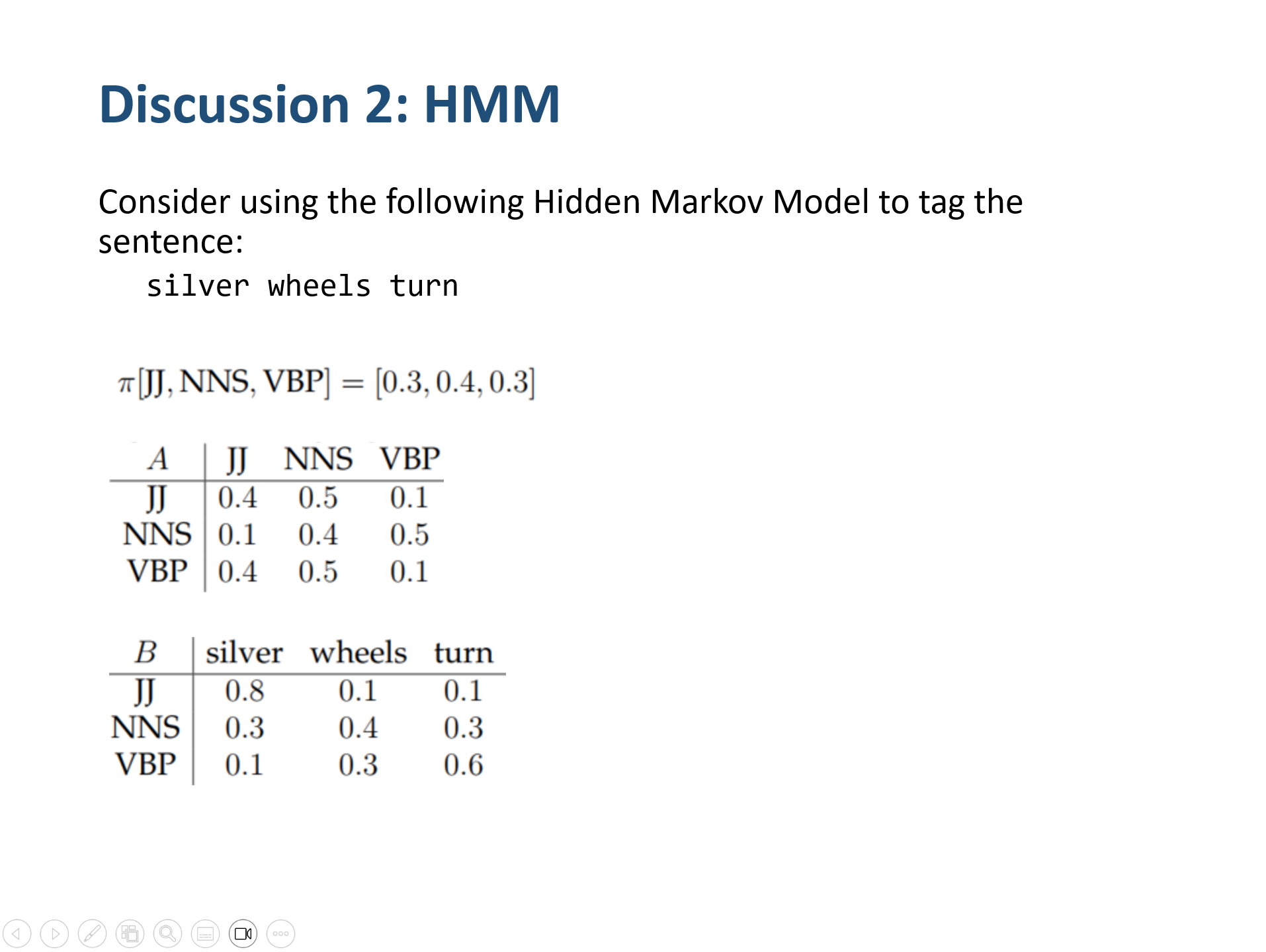

Initial state distribution:

π(JJ)=0.3,π(NNS)=0.4,π(VBP)=0.3

The transition probability matrix:

A=p(JJ∣JJ)p(JJ∣NNS)p(JJ∣VBP)p(NNS∣JJ)p(NNS∣NNS)p(NNS∣VBP)p(VBP∣JJ)p(VBP∣NNS)p(VBP∣VBP)=0.40.10.40.50.40.50.10.50.1

The emission probability matrix (with the observed vocabulary {silver, wheels, turn}) is:

p(silver∣JJ)=0.8,p(wheels∣JJ)=0.1,p(turn∣JJ)=0.1,p(silver∣NNS)=0.3,p(wheels∣NNS)=0.4,p(turn∣NNS)=0.3,p(silver∣VBP)=0.1,p(wheels∣VBP)=0.3,p(turn∣VBP)=0.6.

Viterbi Algorithm for “silver wheels turn”

Let the observation sequence be

O=(silver,wheels,turn),

and let the hidden state sequence be

(s1,s2,s3)∈{JJ,NNS,VBP}3.

Initialization (t=1, word “silver”)

δ1(JJ)δ1(NNS)δ1(VBP)=π(JJ)×p(silver∣JJ)=0.3×0.8=0.24,=π(NNS)×p(silver∣NNS)=0.4×0.3=0.12,=π(VBP)×p(silver∣VBP)=0.3×0.1=0.03.

Step 2: Recursion (t=2, word “wheels”)

δ2(s)=s′∈{JJ, NNS, VBP}max[δ1(s′)×p(s′→s)]×p(wheels∣s).

1. δ2(JJ)

δ1(JJ)×p(JJ→JJ)×p(wheels∣JJ)=0.24×0.4×0.1=0.0096δ1(NNS)×p(NNS→JJ)×p(wheels∣JJ)=0.12×0.1×0.1=0.0012δ1(VBP)×p(VBP→JJ)×p(wheels∣JJ)=0.03×0.4×0.1=0.0012

Choose the max value 0.0096, from JJ→JJ

δ2(JJ)=0.0096

2. δ2(NNS)

0.24×0.5×0.4=0.0480.12×0.4×0.4=0.01920.03×0.1×0.4=0.006

Choose the max value 0.048 , from JJ→NNS

δ2(NNS)=0.048

3. δ2(VBP)

0.024×0.1×0.3=0.00720.12×0.5×0.3=0.0180.03×0.1×0.3=0.0009

Choose max Value 0.072, from NNS→VBP

Step 3: Recursion (t=3, word “sliver”)

Same as previous one

δ3(s)=s′∈{JJ, NNS, VBP}max[δ2(s′)×p(s′→s)]×p(turn∣s).

δ3(JJ)=0.00072δ3(NNS)=0.00576δ3(VBP)=0.0144

Step 4 Termination and Backtracking

At t=3, we compare:

δ3(JJ)=0.00072,δ3(NNS)=0.00576,δ3(VBP)=0.0144

The highest probability is 0.0144 for state VBP. Backtracking the recorded predecessor states:

- At t=3, the best state is VBP.

- At t=2, the best transition leading to VBP came from NNS.

- At t=1, the best transition leading to NNS came from JJ.

which corresponds to the following tagging:

- silver → JJ

- wheels → NNS

- turn → VBP