An SVM is a linear binary classifier: choosing parameters means choosing a separating boundary (hyperplane).

SVMs aim to find the separation boundary that maximises the margin between the classes.

A hyperplane is a decision boundary that differentiates the two classes in SVM.

- Hyperplane: a hyperplane is a flat hypersurface, a subspace whose dimension is one less than that of the ambient space.

The hyperplane of the decision boundary in Rm is defined as

wTx+b=0

- w: a normal vector perpendicular to the hyperplane

- b : the bias term

The distance from the 𝑖-th point to a perfect boundary is

∥ri∥=∥w∥yi(w′xi+b)

- The margin width is the distance to the closest point

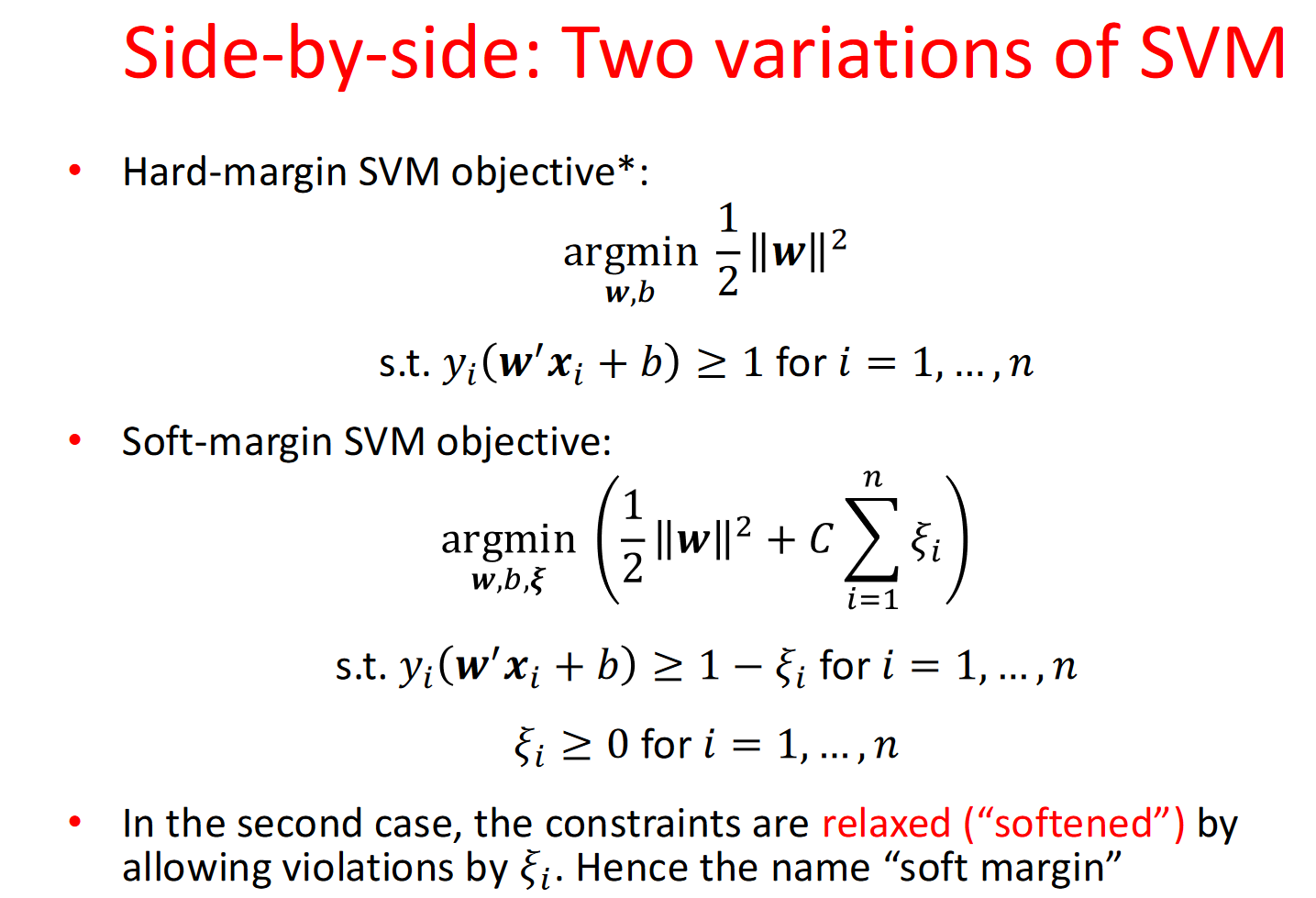

Hard-margin SVM objective

SVMs aim to find:

argw,bmin21∥w∥2

- factor of 21 is for mathematical convenience

Subject to constraints:

yi(w′xi+b)≥1 for i=1,…,n

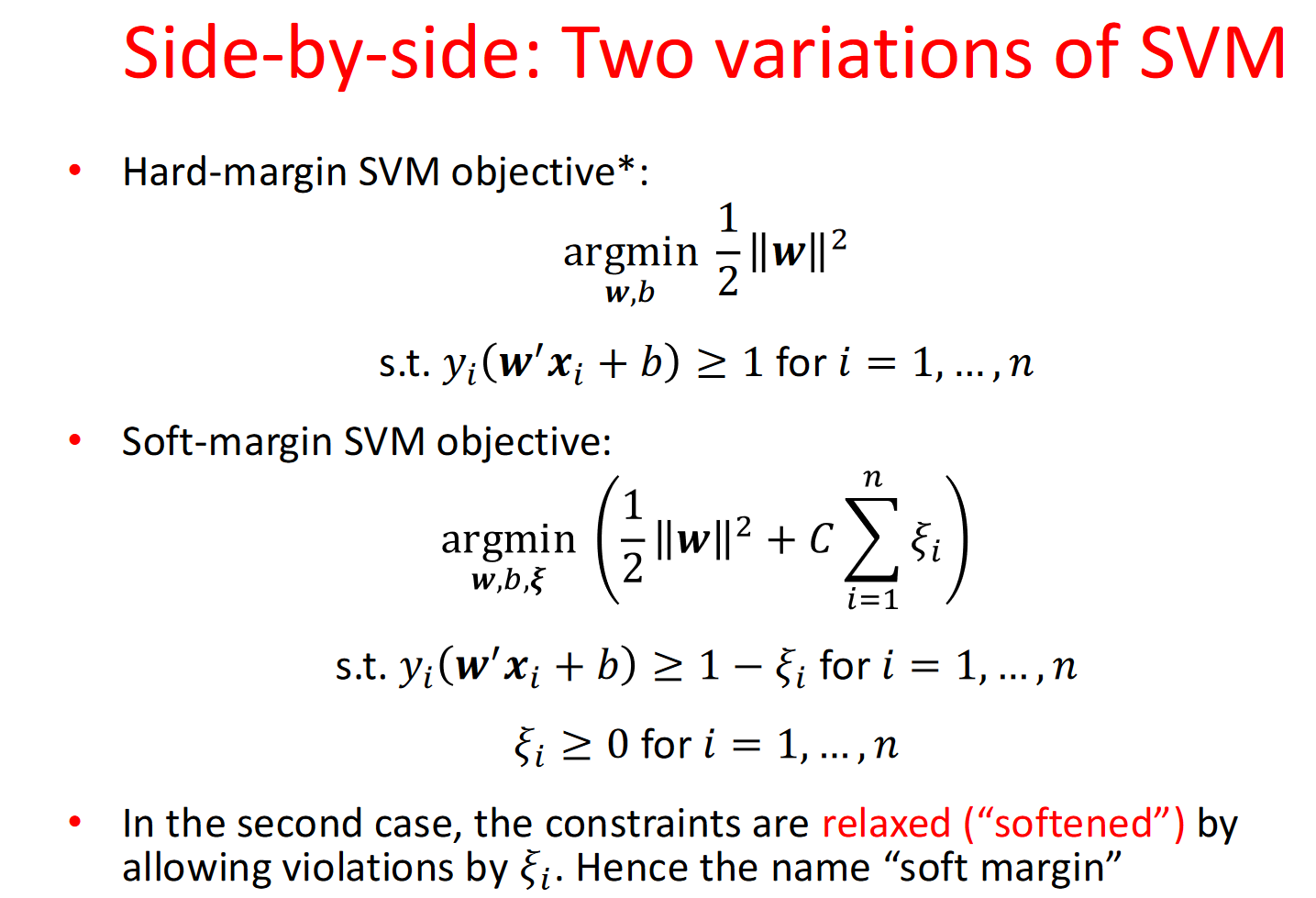

Soft-margin SVM

- Soft-margin SVM objective:

w,b,ξargmin21∥w∥2+Ci=1∑nξi

- ξi: slack variable

Subject to constraints:

ξi≥1−yi(w′xi+b) for i=1,…,nξi≥0 for i=1,…,n

Kernel Methods

The primal problem of SVM is a convex optimaztion problem. We can utilize Lagrangian and Duality to solve the problem in a more easy way.

Lagrangian multipliers:

- Transform to unconstrained optimisation

- Transform primal program to a related dual program, alternate to primal

- Analyse necessary & sufficient conditions for solutions of both programs

primal program : minxmaxλ≥0,vL(x,λ,v)

dual program : maxλ≥0,vminxL(x,λ,v) (Easier to compute!)

Karush-Kuhn-Tucker Necessary Conditions

- Primal feasibility : gi(x)≤0 and hj(xi)=0. The solution x∗ must satisfy the primal consrtraints.

- Dual feasibility : λ≥0 for i=1,…,n. The Lagrange multiplier λi∗ must be non-negative

- Complementary slackness : λi∗gi(x∗)=0, for i=1,…,n. For any inequality constriant, the product of the Lagrange multiplier and the constraint function must be zero.

- Stionary : ∇xL(x∗,λ∗,ν∗)=0. The gradient of the Lagrangian with respect to x must be zero at the optimal solution

Hard-margin SVM with The Lagrangian and duality

L(w,b,λ)=21w′w+i=1∑nλi−i=1∑nλiyixi′w−i=1∑nλiyib

Applying the staionary contion in KKT:

∂b∂L=−i=1∑nλiyi=0→New constraint, Eliminates primal variable b∇wL=w∗−i=1∑nλiyixi=0→Eliminates primal variable w∗=i=1∑nλiyixi

Then we make the problem moew easier! Here is:

Dual program for hard-margin SVM

λargmaxi=1∑nλi−21i=1∑nj=1∑nλiλjyiyjxi′xjs.t.λi≥0 and i=1∑nλiyi=0

Making predictions with dual solution

s=b∗+i=1∑nλi∗yixi′x

For lagrangian problem:

If λi=0 : The sample xi is does not impact the decision boundary.

For lagrangian problem in soft-margin SVM the slack variable ξ:

- if ξ<0, which is theoretically impossible, as ξ represent the deviation from the margin and is defined to be non-negative, i.e., ξ≥0

- ξ=0 : The variable is correctly classified and lies either outside the margin or exactly on the margin boundary.

- 0<ξ<1 : The sample lies within the margin but is still correctly classified.

- 1<ξ<2 : The sample is misclassified, but the distance to the hyperplane does not exceed the opponent's margin.

- ξ≥2 : The sample is severely misclassified and is more than one margin width away from the hyperplane.

Soft-margin SVM’s dual

The function for Sofr-Margin is similar. The difference is the constraint of λ

λargmaxi=1∑nλi−21i=1∑nj=1∑nλiλjyiyjxi′xjs.t. C≥λi≥0 and i=1∑nλiyi=0

Kernelising the SVM

A stragety for Feature space transformation

- Map data into a new feature space

- Run hard-margin or soft-margin SVM in new space

- Decision boundary is non-linear in original space

xi′xj→ϕ(xi)′ϕ(xj)

Polynomial kernel

k(u,v)=(u′v+c)d

Radial basis function kernel

k(u,v)=exp(−γ∥u−v∥2)

Kernel trick

In machine learning, the kernel trick allows us to compute the inner product in a high-dimensional feature space without explicitly mapping data points to that space.

For two data points xi and xj :

xi=[xi1xi2],xj=[xj1xj2]

The polynomial kernel function defines an inner product in the original space as:

K(xi,xj)=(xi⋅xj+1)2

Expanding the polynomial:

K(xi,xj)=(xi1xj1+xi2xj2+1)2=xi12xj12+xi22xj22+2xi1xj1xi2xj2+2xi1xj1+2xi2xj2+1

This result is equivalent to the inner product in a higher-dimensional space without explicitly computing the high-dimensional mapping.