AI Planning

Classical Planning

State Model

- Finite and discrete state space

- a known initial state

- a set of goal states

- actions applicable in each

- a deterministic transition function for

- positve action cost

Goals states can be more than one

Search Algorithm

- b: maximal branching factor

- d: goal depth

- m: the maximal depth reached

Breadth First Search Data structure: FIFO (Queue)

Breadth First Search explores node level by level, and a queue (FIFO) allows nodes to be processed in the order that they are discovered, which ensures all nodes at a given depth are expanded before moving to the next level.

Depth first Search

Data structure: LIFO (Stack)

Depth FiRst Search explores as deep as possible along each branch before backtracking, and a stack (LIFO) enables this by always processing the most recently discovered node that still has unexplored successors.

Uniform Cost Search

Data structure: Priority Queue

Uniform Cost Search expands nodes based on t6he lowest cumulaitve cost, and a priority queue efficiently retrieves the node with the minimal cost at each step, allowing UCS for finding the optimal path.

| DFS | BFS | ID | A* | HC | IDA* | |

|---|---|---|---|---|---|---|

| Complete | No | Yes | Yes | Yes | No | Yes |

| Optimal | No | Yes* | Yes* | Yes | No | Yes |

| Time | ||||||

| Space |

A search algorithm is considered complete if it guarantees to find a solution, if one exists.

BFS and IDS are optimal only all nodes (edges) has equal costs(uniform).

Astar Search

: The actual cost from the initial state to the current state

: The heuristic value from the current state to goal state

Actual optimal value from current state s to goal state

Property of heuristic

- Admissble: never over-estimate. If for all

- Safe: for all

- Goal aware: for all

- Consistent:

consistent + goal aware admissible

admissible goal aware + safe

Optimality for A star search

- A star search without re-open mechanism requires both consistent and admissible to ensure finding an optimal path.

- With re-open mechanism, it only requies admissible

Example for a non-optimal solution by inconsistent but adimissble for A* search without re-open nodes.

(8)

A

/ \

+1 / \ +3

/ \

B ----- C ----- D

(7) +1 (0) +6 (0)

Reference: Why does A* with admissible non consistent heuristic find non optimal solution?

Greedy best-first search

- Using priority queue expand node by h(s)

- Completeness: For safe heuristic, Yes

- Optimality: No

STRIPS And Relaxation

A STRIPS problem P = : Propositions, Initial state, Goal, Actions.

h+: The Optimal Delete Relaxation Heuristic

Default STRIP model: s' = s + add(s) - del(s)

after delete relaxation: s' = s + add(s)

Defination

如果目标大于1: 等于每个小目标的 之和

Defination

如果目标大于1: 等于每个小目标的 的最大值

和 :如果一个动作有多个precondition,则视为多个g:

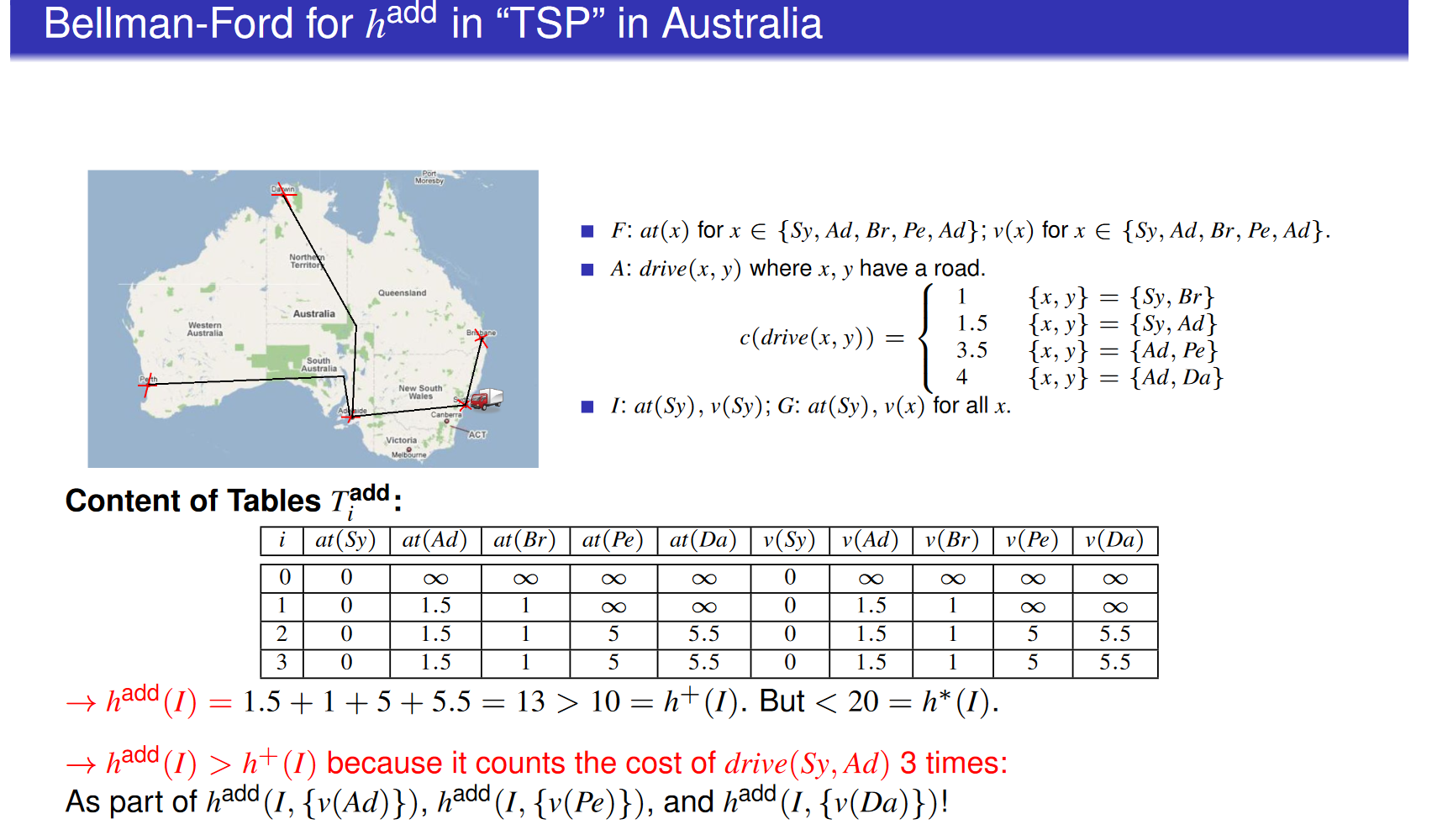

Bellman-Ford Table

is admissible, but is typically far too optimistic

is not admissible, but is typically a lot more informed than

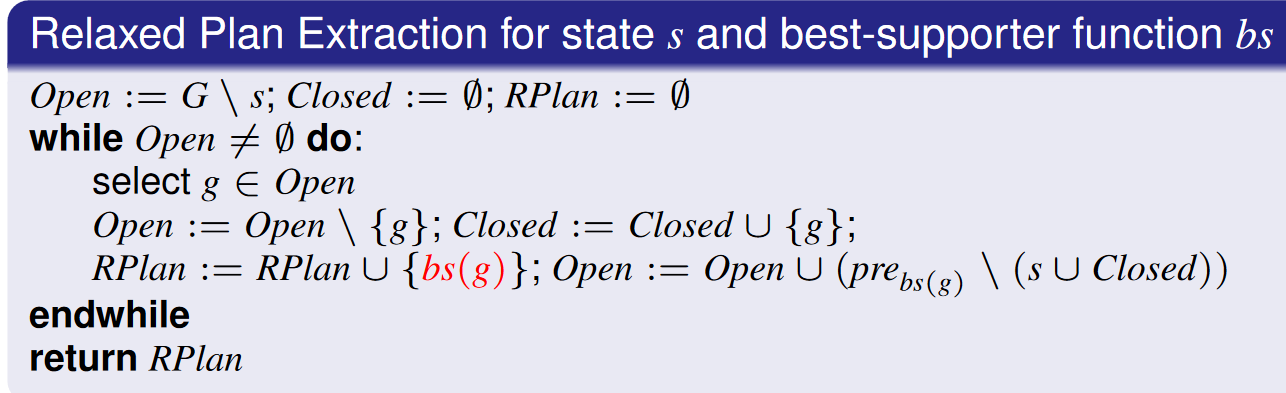

Relaxed Plan Extraction

A heuristic function is called a relaxed plan heuristic, denoted , if, given a state , it returns if no relaxed plan exists, and otherwise returns where is the action set returned by relaxed plan extraction on a closed well-founded best-supporter function for .

- Plan set means no duplicate actions.

- never over count sub plans due to

- may be inadmissible, just like , but for more subtle reasons.

- In practice, typically does not over-estimate

For relaxed plan extraction to make sense, it requires a closed well-founded best-supporter function:

The supporter function:

The supporter function:

Proposition : ( is Pessimistic and Agrees with on ). For all STRIPS planning tasks , for all states, if and only if . There exists STRIPS planning tasks and so that .

Width-based search

novelty w(s)